CFA

Confirmatory Factor Analysis (CFA) is the next step after exploratory factor analysis (EFA) in determining the factor structure of your dataset. In the EFA we explore the factor structure (how the variables relate and group based on inter-variable correlations); in the CFA we confirm the factor structure we extracted in the EFA.

LESSON: Confirmatory Factor Analysis

VIDEO TUTORIAL: CFA part 1

VIDEO TUTORIAL: CFA part 2

Do you know of some citations that could be used to support the topics and procedures discussed in this section? Please email them to me with the name of the section, procedure, or subsection that they support. Thanks!

Model Fit

VIDEO TUTORIAL: Model Fit Thresholds

Model fit refers to how well our proposed model (in this case, the model of the factor structure) accounts for the correlations between variables in the dataset. If we are accounting for all the major correlations inherent in the dataset (with regard to the variables in our model), then we will have good fit. If not, then there is a significant "discrepancy" between the correlations proposed and the correlations observed, and thus we have poor model fit: our proposed model does not "fit" the observed or "estimated" model (i.e., the correlations in the dataset). Refer to the CFA video tutorial for specifics on how to go about performing a model fit analysis during the CFA.
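To make the idea of a "discrepancy" concrete, here is a minimal sketch of how a standardized factor model implies a correlation for each pair of items, and how far the observed correlation sits from it. It assumes a simple structure (each item loads on one factor, no correlated errors); the item names, loadings, factor correlation, and observed correlations are all made-up values for illustration, not AMOS output.

```python
def implied_correlation(loadings, factor_of, factor_corr, item_a, item_b):
    """Correlation between two items implied by a standardized CFA model."""
    fa, fb = factor_of[item_a], factor_of[item_b]
    # Same factor: product of the two loadings. Different factors: product of
    # the loadings times the correlation between the two factors.
    phi = 1.0 if fa == fb else factor_corr[frozenset((fa, fb))]
    return loadings[item_a] * phi * loadings[item_b]


def residuals(observed, loadings, factor_of, factor_corr):
    """Observed minus implied correlation for every item pair supplied."""
    return {
        pair: r - implied_correlation(loadings, factor_of, factor_corr, *pair)
        for pair, r in observed.items()
    }


# Hypothetical standardized estimates for two factors, F1 and F2.
loadings = {"x1": 0.8, "x2": 0.7, "y1": 0.9, "y2": 0.6}
factor_of = {"x1": "F1", "x2": "F1", "y1": "F2", "y2": "F2"}
factor_corr = {frozenset(("F1", "F2")): 0.5}

# Hypothetical observed correlations from the dataset.
observed = {("x1", "x2"): 0.58, ("x1", "y1"): 0.36, ("x2", "y2"): 0.45}

for pair, res in residuals(observed, loadings, factor_of, factor_corr).items():
    print(pair, round(res, 3))
```

Pairs with residuals near zero are well accounted for by the model; a large residual (here the x2/y2 pair) is the kind of discrepancy that hurts fit.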

Metrics

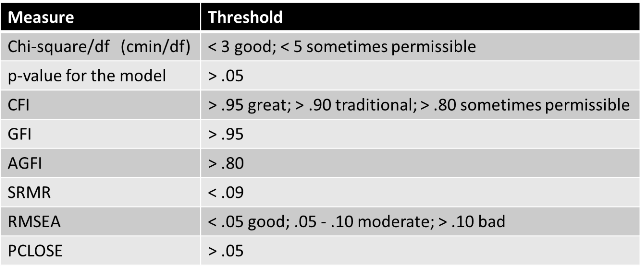

There are specific measures that can be calculated to determine goodness of fit. The metrics that ought to be reported are listed in the table, along with their acceptable thresholds. Goodness of fit is inversely related to sample size and the number of variables in the model, so these thresholds are simply a guideline. For more contextualized thresholds, see Table 12-4 in Hair et al. (2010, p. 654). The thresholds listed in the table are from Hu and Bentler (1999).
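If you want to check a set of fit indices against cutoffs programmatically, a small helper like the one below may be handy. The cutoffs in `CUTOFFS` are an assumption: they are values commonly attributed to Hu and Bentler (1999), not a transcription of the table on this page, so swap in whatever thresholds you actually cite. The function name and the example index values are hypothetical.

```python
# Assumed cutoffs as (comparison, value). Replace with the thresholds you cite.
CUTOFFS = {
    "CFI": (">=", 0.95),
    "SRMR": ("<=", 0.08),
    "RMSEA": ("<=", 0.06),
}


def check_fit(indices, cutoffs=CUTOFFS):
    """Return {index name: True/False} for each reported index with a cutoff."""
    results = {}
    for name, (op, cutoff) in cutoffs.items():
        if name not in indices:
            continue  # index not reported, nothing to check
        value = indices[name]
        results[name] = value >= cutoff if op == ">=" else value <= cutoff
    return results


# Hypothetical fit indices copied from a model's output.
print(check_fit({"CFI": 0.96, "SRMR": 0.05, "RMSEA": 0.07}))
```

Treat the result as a screening aid only; as noted above, the thresholds are guidelines that shift with sample size and model complexity.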

Modification indices

Modification indices offer suggested remedies to discrepancies between the proposed and estimated model. In a CFA, there is not much we can do by way of adding regression lines to fix model fit, as all regression lines between latent and observed variables are already in place. Therefore, in a CFA, we look to the modification indices for the covariances. Generally, we should not covary error terms with observed or latent variables, or with other error terms that are not part of the same factor. Thus, the most appropriate modification available to us is to covary error terms that are part of the same factor. The figure below illustrates this guideline, although there are exceptions. In general, you want to address the largest modification indices before addressing more minor ones. Note that some argue there are never appropriate reasons to covary errors. For more information on when it is okay to covary error terms, refer to David Kenny's thoughts on the matter on his website, or to the very helpful article: Hermida, R. 2015. "The Problem of Allowing Correlated Errors in Structural Equation Modeling: Concerns and Considerations," Computational Methods in Social Sciences (3:1), p. 5.

Standardized Residual Covariances

Standardized Residual Covariances (SRCs) are much like modification indices; they point out where the discrepancies are between the proposed and estimated models. However, they also indicate whether or not those discrepancies are significant. A significant standardized residual covariance is one with an absolute value greater than 2.58. Significant residual covariances significantly decrease your model fit. Fixing model fit per the residuals matrix is similar to fixing model fit per the modification indices, and the same rules apply. For a more specific run-down of how to calculate and locate residuals, refer to the CFA video tutorial. Note, however, that in practice I never address SRCs unless I cannot achieve adequate fit via modification indices, because addressing the SRCs requires the removal of items.
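Scanning a large residuals matrix by eye is tedious, so here is a small sketch that flags every item pair whose SRC exceeds the 2.58 cutoff in absolute value. It assumes you have copied the matrix out of your output as a list of rows (only the lower triangle is read, and the diagonal is skipped); the item names and values are hypothetical.

```python
def significant_residuals(items, src_matrix, cutoff=2.58):
    """List (item, item, value) for off-diagonal SRCs with |value| > cutoff."""
    flagged = []
    for i in range(len(items)):
        for j in range(i):  # lower triangle only, diagonal skipped
            value = src_matrix[i][j]
            if abs(value) > cutoff:
                flagged.append((items[i], items[j], value))
    # Largest discrepancies first, since those are the ones to look at first.
    return sorted(flagged, key=lambda entry: abs(entry[2]), reverse=True)


# Hypothetical standardized residual covariances (lower triangle filled in).
items = ["sw1", "rd3", "q5"]
src = [
    [0.0, 0.0, 0.0],
    [3.1, 0.0, 0.0],
    [-2.7, 0.4, 0.0],
]

for row in significant_residuals(items, src):
    print(row)
```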

Validity and Reliability

VIDEO TUTORIAL: Testing Validity and Reliability in a CFA

It is absolutely necessary to establish convergent and discriminant validity, as well as reliability, when doing a CFA. If your factors do not demonstrate adequate validity and reliability, moving on to test a causal model will be useless - garbage in, garbage out! There are a few measures that are useful for establishing validity and reliability: Composite Reliability (CR), Average Variance Extracted (AVE), Maximum Shared Variance (MSV), and Average Shared Variance (ASV). The video tutorial will show you how to calculate these values. The thresholds for these values are as follows:

Reliability

- CR > 0.7

Convergent Validity

- AVE > 0.5

Discriminant Validity

- MSV < AVE

- Square root of AVE greater than inter-construct correlations

If you have convergent validity issues, then your variables do not correlate well with each other within their parent factor; i.e., the latent factor is not well explained by its observed variables. If you have discriminant validity issues, then your variables correlate more highly with variables outside their parent factor than with the variables within their parent factor; i.e., the latent factor is better explained by some other variables (from a different factor) than by its own observed variables.
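As a rough illustration of how these values can be computed by hand, the sketch below derives CR, AVE, and MSV from standardized loadings and inter-construct correlations, then applies the thresholds listed above. The formulas are the commonly used ones (AVE as the mean squared loading, CR from the summed loadings and error variances); I am assuming them here, and the Stats Tools Package may compute things slightly differently. The factor names, loadings, and correlation are hypothetical.

```python
def ave(loadings):
    """Average variance extracted: mean of the squared standardized loadings."""
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    total = sum(loadings)
    error = sum(1.0 - l * l for l in loadings)
    return total ** 2 / (total ** 2 + error)


def msv(factor, correlations):
    """Maximum shared variance: largest squared correlation with another factor."""
    return max((r * r for pair, r in correlations.items() if factor in pair), default=0.0)


def validity_report(loadings_by_factor, correlations):
    report = {}
    for factor, loadings in loadings_by_factor.items():
        cr, a, m = composite_reliability(loadings), ave(loadings), msv(factor, correlations)
        report[factor] = {
            "CR": cr, "AVE": a, "MSV": m,
            "reliable": cr > 0.7,
            "convergent": a > 0.5,
            # MSV < AVE is the same condition as sqrt(AVE) exceeding every
            # absolute inter-construct correlation for this factor.
            "discriminant": m < a,
        }
    return report


# Hypothetical standardized loadings and factor correlation.
loadings_by_factor = {"F1": [0.8, 0.7, 0.9], "F2": [0.6, 0.65, 0.7]}
correlations = {("F1", "F2"): 0.7}

for factor, row in validity_report(loadings_by_factor, correlations).items():
    print(factor, {k: round(v, 3) if isinstance(v, float) else v for k, v in row.items()})
```

In this made-up example F1 passes all three checks, while F2 falls short on reliability, convergent validity, and discriminant validity.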

If you need to cite these suggested thresholds, please use the following:

- Hair, J., Black, W., Babin, B., and Anderson, R. (2010). Multivariate data analysis (7th ed.): Prentice-Hall, Inc. Upper Saddle River, NJ, USA.

AVE is a strict measure of convergent validity. Malhotra and Dash (2011, p. 702) note that "AVE is a more conservative measure than CR. On the basis of CR alone, the researcher may conclude that the convergent validity of the construct is adequate, even though more than 50% of the variance is due to error."

- Malhotra N. K., Dash S. (2011). Marketing Research an Applied Orientation. London: Pearson Publishing.

Here is an updated video that uses the most recent Stats Tools Package, which includes a more accurate measure of AVE and CR.

VIDEO TUTORIAL: SEM Series (2016) 5. Confirmatory Factor Analysis Part 2

Common Method Bias (CMB)

VIDEO TUTORIAL: Zero-constraint approach to CMB

- REF: Podsakoff, P.M., MacKenzie, S.B., Lee, J.Y., and Podsakoff, N.P. "Common method biases in behavioral research: a critical review of the literature and recommended remedies," Journal of Applied Psychology (88:5) 2003, p 879.

Common method bias refers to a bias in your dataset due to something external to the measures. Something external to the question may have influenced the response given. For example, collecting data using a single (common) method, such as an online survey, may introduce systematic response bias that will either inflate or deflate responses. A study that has significant common method bias is one in which a majority of the variance can be explained by a single factor. To test for a common method bias you can do a few different tests. Each will be described below. For a step by step guide, refer to the video tutorials.

Harman’s single factor test

- It should be noted that the Harman's single factor test is no longer widely accepted and is considered an outdated and inferior approach.

Harman's single factor test checks whether the majority of the variance can be explained by a single factor. To do this, constrain the number of factors extracted in your EFA to be just one (rather than extracting via eigenvalues). Then examine the unrotated solution. If CMB is an issue, a single factor will account for the majority of the variance in the model (as in the figure below).
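For intuition only, the share of variance captured by a single unrotated factor can be approximated from the item correlation matrix. The sketch below uses plain power iteration to find the largest eigenvalue and divides it by the number of items; this is the principal-components version of the idea, which is an assumption on my part, and an EFA with a different extraction method will report somewhat different numbers. The correlation matrix is hypothetical.

```python
def first_factor_share(corr, iterations=1000, tol=1e-12):
    """Proportion of total variance carried by the first principal component."""
    n = len(corr)
    # Start from a unit vector of equal weights. In unusual matrices this start
    # can be orthogonal to the dominant component; that case is ignored here.
    vector = [1.0 / n ** 0.5] * n
    eigenvalue = 0.0
    for _ in range(iterations):
        product = [sum(row[j] * vector[j] for j in range(n)) for row in corr]
        norm = sum(v * v for v in product) ** 0.5
        vector = [v / norm for v in product]
        if abs(norm - eigenvalue) < tol:
            break
        eigenvalue = norm
    # A correlation matrix has trace n, so the share is eigenvalue / n.
    return norm / n


# Hypothetical correlation matrix for three items.
corr = [
    [1.0, 0.5, 0.5],
    [0.5, 1.0, 0.5],
    [0.5, 0.5, 1.0],
]

share = first_factor_share(corr)
print(round(share, 3), "majority" if share > 0.5 else "not a majority")
```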

Common Latent Factor

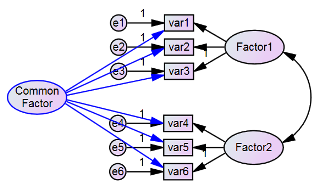

This method uses a common latent factor (CLF) to capture the common variance among all observed variables in the model. To do this, simply add a latent factor to your AMOS CFA model (as in the figure below), and then connect it to all observed items in the model. Then compare the standardized regression weights from this model to the standardized regression weights of a model without the CLF. If there are large differences (e.g., greater than 0.200), then you will want to retain the CLF as you either impute composites from factor scores or move into the structural model. The CLF video tutorial demonstrates how to do this. This approach is taken in this article:

- Serrano Archimi, C., Reynaud, E., Yasin, H.M. and Bhatti, Z.A. (2018), “How perceived corporate social responsibility affects employee cynicism: the mediating role of organizational trust”, Journal of Business Ethics, Vol. 151 No. 4, pp. 907-921.
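The comparison of standardized regression weights with and without the CLF is easy to script once both sets of estimates are copied out of the output. This is a minimal sketch with hypothetical item names and values; the 0.2 cutoff is the one suggested above.

```python
def clf_deltas(without_clf, with_clf, cutoff=0.2):
    """Items whose standardized weight changes by more than the cutoff."""
    deltas = {}
    for item, weight in without_clf.items():
        delta = weight - with_clf[item]
        if abs(delta) > cutoff:
            deltas[item] = round(delta, 3)
    return deltas


# Hypothetical standardized regression weights from the two models.
without_clf = {"q1": 0.82, "q2": 0.78, "q3": 0.71}
with_clf = {"q1": 0.55, "q2": 0.70, "q3": 0.69}

print(clf_deltas(without_clf, with_clf))
```

An empty result would suggest the CLF is not absorbing much common variance; any flagged item is a reason to consider retaining the CLF.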

Marker Variable

This method is simply an extended and more accurate way to do the common latent factor method. For this method, just add another latent factor to the model (as in the figure below), but make sure it is something that you would not expect to correlate with the other latent factors in the model (i.e., the observed variables for this new factor should have low, or no, correlation with the observed variables from the other factors). Then add the common latent factor. This method teases out truer common variance than the basic common latent factor method because it is finding the common variance between unrelated latent factors. Thus, any common variance is likely due to a common method bias rather than natural correlations. This method is demonstrated in the common method bias video tutorial.

Zero and Equal Constraints

VIDEO TUTORIAL: Specific Bias 2017

The most current and best approach is outlined below. Here is an article that recommends this approach (pg. 20):

- Do an EFA, and make sure to include “marker” or specific bias (SB) constructs.

  - Specific bias constructs are just like any other multi-item constructs, but they measure specific sources of bias that may account for shared variance not due to a causal relationship between key variables in the study. A common one is Social Desirability Bias.

- Do the CFA with SB constructs covaried to other constructs (this looks like a normal CFA).

  - Assess and adjust to achieve adequate goodness of fit.

  - Assess and adjust to achieve adequate validity and reliability.

- Add a common latent factor (CLF), sometimes called an unmeasured method factor.

  - Make sure the CLF is connected to all observed items, including the items of the SB constructs.

  - If this breaks your model, then remove the CLF and proceed with the steps below.

  - If it does not break your model, then in the steps below, keep the SB constructs covaried to the other latent factors, and keep the CLF connected with regression lines to all observed items.

  - The steps below assume the CLF will break the model, so some instructions that say to connect the SB to all observed variables should instead be CLF to all observed variables (if the CLF did not break your model).

- Then conduct the CFA with the SB constructs shown to influence ALL indicators of other constructs in the study. Do not correlate the SB constructs with the other constructs of study. If there is more than one SB construct, they follow the same approach and can correlate with each other.

  - Retest validity, but be willing to accept lower thresholds.

  - If change in AVE is extreme (e.g., >.300) then there is too much shared variance attributable to a response variable. This means that variable is compromised and any subsequent analysis with it may be biased.

  - If the majority of factors have extreme changes to their AVE, you might consider rethinking your data collection instrument and how to reduce specific response biases.

- If the validities are still sufficient, then conduct the zero-constrained test. This test determines whether the response bias is any different from zero.

  - To do this, constrain all paths from the SB constructs to all indicators (but do not constrain their own) to zero. Then conduct a chi-square difference test between the constrained and unconstrained models.

  - If the null hypothesis cannot be rejected (i.e., the constrained and unconstrained models are the same or "invariant"), you have demonstrated that you were unable to detect any specific response bias affecting your model. You can move on to causal modeling, but make sure to retain the SB construct(s) to include as control in the causal model. See the bottom of this subsection for how to do this.

  - If you changed your model while testing for specific bias, you should retest validities and model fit with this final (unconstrained) measurement model, as it may have changed.

- If the zero-constrained chi-square difference test is significant (i.e., you reject the null, so response bias is not zero), then you should run an equal-constrained test. This test determines whether the response bias is evenly distributed across factors.

  - To do this, constrain all paths from the SB construct to all indicators (not including their own) to be equal. There are multiple ways to do this. One easy way is simply to name them all the same thing (e.g., "aaa").

  - If the chi-square difference test between the constrained (to be equal) and unconstrained models indicates invariance (i.e., fail to reject the null that they are equal), then the bias is equally distributed. Make note of this in your report, e.g., "A test of equal specific bias demonstrated evenly distributed bias."

    - Move on to causal modeling with the SB constructs retained (keep them).

  - If the chi-square test is significant (i.e., unevenly distributed bias), which is more common, you should still retain the SB construct for subsequent causal analyses. Make note of this in your report, e.g., "A test of equal specific bias demonstrated unevenly distributed bias."

- What to do if you have to retain the specific bias factor

  - You have two options. The first is to impute factor scores while the SB construct is connected to all observed variables (thereby essentially parceling out the shared bias with the SB construct), and then exclude from your causal model the SB construct that was imputed during your measurement model. The second is to disconnect the SB construct from all your observed variables, covary it with all your latent variables, and then impute factor scores. If taking this latter approach, the SB will not be parceled out, so you will then need to include the factor score for the SB construct in the causal model as a control variable, connected to all other variables. If you are also able to retain the CLF (i.e., it does not break your model), then keep it while imputing. If you have only connected the CLF to the observed variables (and not the SB construct), then make sure to use the SB construct as a control variable in the causal model.
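The zero-constrained and equal-constrained tests in the steps above both come down to a chi-square difference test, so here is a hedged sketch of that arithmetic and the resulting decision. The chi-square tail probability is computed with a standard closed-form recurrence using only the `math` module (it assumes a positive integer difference in degrees of freedom). The function names, the 0.05 alpha, and the chi-square/df values are all hypothetical; in practice you would read the chi-square and df for each model from your AMOS output.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square statistic (integer df >= 1)."""
    if x <= 0:
        return 1.0
    y = x / 2.0
    # Closed-form starting points: df = 1 (odd) or df = 2 (even).
    a = 0.5 if df % 2 else 1.0
    q = math.erfc(math.sqrt(y)) if df % 2 else math.exp(-y)
    # Recurrence Q(a + 1, y) = Q(a, y) + y**a * exp(-y) / gamma(a + 1).
    while a < df / 2.0:
        q += y ** a * math.exp(-y) / math.gamma(a + 1.0)
        a += 1.0
    return q


def chi2_difference(constrained, free):
    """Each argument is a (chi2, df) tuple. Returns (delta chi2, delta df, p)."""
    delta_chi2 = constrained[0] - free[0]
    delta_df = constrained[1] - free[1]
    if delta_df < 1:
        raise ValueError("constrained model must have more degrees of freedom")
    return delta_chi2, delta_df, chi2_sf(delta_chi2, delta_df)


def specific_bias_conclusion(free, zero, equal, alpha=0.05):
    """Follow the zero-constrained test, then the equal-constrained test."""
    if chi2_difference(zero, free)[2] >= alpha:
        return "no detectable specific bias"
    if chi2_difference(equal, free)[2] >= alpha:
        return "evenly distributed bias"
    return "unevenly distributed bias"


# Hypothetical (chi2, df) pairs for the three models.
free, zero, equal = (412.3, 200), (455.9, 215), (440.1, 214)
print(chi2_difference(zero, free))
print(specific_bias_conclusion(free, zero, equal))
```

Whatever the conclusion, the steps above still call for retaining the SB construct(s) in the causal model; the test only determines what you report about the bias.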

Measurement Model Invariance

VIDEO TUTORIAL: Measurement Model Invariance (using Name Parameters tool)

VIDEO TUTORIAL: Measurement Model Invariance (using MGA Manager)

Before creating composite variables for a path analysis, configural, metric, and scalar invariance should be tested during the CFA to validate that the factor structure and loadings are sufficiently equivalent across groups. Otherwise, your composite variables will not be very useful, because they are not actually measuring the same underlying latent construct for both groups.

Configural

Configural invariance tests whether the factor structure represented in your CFA achieves adequate fit when both groups are tested together and freely (i.e., without any cross-group path constraints). To do this, simply build your measurement model as usual, create two groups in AMOS (e.g., male and female), and then split the data along gender. Next, attend to model fit as usual (see the Model Fit section above for a reminder). If the resultant model achieves good fit, then you have configural invariance. If you don’t pass the configural invariance test, then you may need to look at the modification indices to improve your model fit or to see how to restructure your CFA.

Metric

If we pass the test of configural invariance, then we need to test for metric invariance. To test for metric invariance, simply perform a chi-square difference test on the two groups just as you would for a structural model. The evaluation is the same as in the structural model invariance test: if you have a significant p-value for the chi-square difference test, then you have evidence of differences between groups; otherwise, they are invariant and you may proceed to make your composites from this measurement model (but make sure you use the whole dataset when you create composites, instead of using the split dataset). If there is a difference between groups, you'll want to find which factors are different (do this one at a time as demonstrated in the video above). Make sure you place the factor constraint of 1 on the factor variance, rather than on the indicator paths (as shown in the video).

Scalar

If we pass metric invariance, we need to then assess scalar invariance. This can be done as shown in the video above. Essentially you need to assess whether intercepts and structural covariances are equivalent across groups. This is done the same as with metric invariance, but with the test being done on intercepts and structural covariances instead of measurement weights. Keep constraints the same, but for each factor, for one of the groups, make the variance constraint = 1. This can be done in the Manage Models section of AMOS.

Contingency Plans

If you do not achieve invariant models, here are some appropriate approaches in the order I would attempt them.

1. Modification indices: Fit the model for each group using the unconstrained measurement model. You can toggle between groups when looking at modification indices. So, for example, for males, there might be a high MI for the covariance between e1 and e2, but for females this might not be the case. Go ahead and address those covariances appropriately for both groups. Note that deleting an item removes it for both groups. If fitting the model this way does not solve your invariance issues, then you will need to look at differences in regression weights.

2. Regression weights: You need to figure out which item or items are causing the trouble (i.e., which ones do not measure the same across groups). The lack of invariance is most likely due to one of two things: the strength of the loading for one or more items differs significantly across groups, or an item or two load better on a factor other than their own for one or more groups. To address the first issue, just look at the standardized regression weights for each group to see if there are any major differences (just eyeball it). If you find a regression weight that is exceptionally different (for example, item2 on Factor 3 has a loading of 0.34 for males and 0.88 for females), then you may need to remove that item if possible. Retest and see if invariance issues are solved. If not, try addressing the second issue (explained next).

3. Standardized Residual Covariances: To address the second issue, you need to analyze the standardized residual covariances (check the residual moments box in the output tab). I talk about this a little bit in my video called “Model fit during a Confirmatory Factor Analysis (CFA) in AMOS” around the 8:35 mark. This matrix can also be toggled between groups. Here is a small example for CSRs and BCRs. We observe that for the BCR group, rd3 and q5 have high standardized residual covariances with sw1. So, we could remove sw1 and see if that fixes things, but SW only has three items right now, so another option is to remove rd3 or q5 and see if that fixes things. If not, return to this matrix after rerunning things and see if there are any other issues. Remove items sparingly, and only one at a time, trying your best to leave at least three items with each factor, although two items will also sometimes work if necessary (a two-item factor just becomes unstable). If you still have issues, then your groups are exceptionally different. This may be due to small sample size for one of the groups. If such is the case, then you may have to list that as a limitation and just move on.

2nd Order Factors

VIDEO TUTORIAL: Handling 2nd Order Factors

Handling 2nd order factors in AMOS is not difficult, but it is tricky. And, if you don't get it right, it won't run. The pictures below offer a simple example of how you would model a 2nd order factor in a measurement model and in a structural model. The YouTube video tutorial above demonstrates how to handle 2nd order factors, and explains how to report them.

Common CFA Problems

1. CFA that reaches iteration limit.

- Here is a video:

VIDEO TUTORIAL: Iteration limit reached in AMOS

2. CFA that shows CMB = 0 (sometimes happens when paths from CLF are constrained to be equal)

- The best approach to CMB is just to not constrain them to be equal. Instead, it is best to do a chi-square difference test between the unconstrained model (with CLF and marker if available) and the same model but with all paths from the CLF constrained to zero. This tells us whether the common variance is different from zero.

3. CFA with negative error variances

- This shouldn’t happen if all data screening and EFA worked out well, but it still happens… In such cases, it is permitted to constrain the error variance to a small positive number (e.g., 0.001)

4. CFA with negative error covariances (sometimes shows up as “not positive definite”)

- In such cases, there is usually a measurement issue deeper down (like skewness or kurtosis, or too much missing data, or a variable that is nominal). If it cannot be fixed by addressing these deeper down issues, then you might be able to correct it by moving the latent variable path constraint (usually 1) to another path. Usually this issue accompanies the negative error variance, so we can usually fix it by fixing the negative error variance first.

5. CFA with Heywood cases

- This often happens when we have only two items for a latent variable, and one of them is very dominant. First try moving the latent variable path constraint to a different path. If this doesn’t work, then move the constraint from the path to the latent variable variance AND constrain the paths to be equal (by naming them both the same thing, like “aaa”).

VIDEO TUTORIAL: AMOS Heywood Case

6. CFA with discriminant validity issues

- This shouldn’t happen if the EFA solution was satisfactory. However, it still happens sometimes when two latent factors are strongly correlated. This strong correlation is a sign of overlapping traits; confidence and self-efficacy, for example, are too similar. Either one could be dropped, or you could create a 2nd order factor out of them:

VIDEO TUTORIAL: Handling 2nd Order Factors

7. CFA with “missing constraint” error

- Sometimes the CFA will say you need to impose 1 additional constraint (sometimes it says more than this). This is usually caused by drawing the model incorrectly. Check to see if all latent variables have a single path constrained to 1 (or the latent variable variance constrained to 1).