EFA

Exploratory Factor Analysis (EFA) is a statistical approach for determining the correlation among the variables in a dataset. This type of analysis provides a factor structure (a grouping of variables based on strong correlations). In general, an EFA prepares the variables to be used for cleaner structural equation modeling. An EFA should always be conducted for new datasets. The beauty of an EFA over a Confirmatory Factor Analysis (CFA) is that no a priori theory about which items belong to which constructs is applied. This means the EFA will be able to spot problematic variables much more easily than the CFA. A critical assumption of the EFA is that it is only appropriate for sets of non-nominal items which theoretically belong to reflective latent factors. Categorical/nominal variables (e.g., marital status, gender) should not be included. Formative measures should not be included. Very rarely should objective (rather than perceptual) variables be included, as objective variables rarely belong to reflective latent factors.

VIDEO TUTORIAL: How to do an EFA

LESSON: Exploratory Factor Analysis

Do you know of some citations that could be used to support the topics and procedures discussed in this section? Please email them to me with the name of the section, procedure, or subsection that they support. Thanks!

Rotation types

Rotation causes factor loadings to be more clearly differentiated, which is often necessary to facilitate interpretation. Several types of rotation are available for your use.

Orthogonal

Varimax (most common)

- minimizes the number of variables with high loadings on each factor, so loadings tend toward either high or near-zero values

- makes it possible to identify a variable with a factor

Quartimax

- minimizes the number of factors needed to explain each variable

- tends to generate a general factor on which most variables load with medium to high values

- not very helpful for research

Equimax

- combination of Varimax and Quartimax

Oblique

Oblique rotations allow the factors to correlate. Variables are assessed for their unique relationship with each factor (removing relationships that are shared by multiple factors).

Direct oblimin (DO)

- factors are allowed to be correlated

- diminished interpretability

Promax (Use this one if you're not sure)

- computationally faster than DO

- used for large datasets

Factoring methods

There are three main methods for factor extraction.

Principal Component Analysis (PCA)

Use for a softer solution

- Considers all of the available variance (common + unique) (places 1’s on diagonal of correlation matrix).

- Seeks a linear combination of variables such that maximum variance is extracted, then repeats this step on the remaining variance.

- Use when the concern is prediction or parsimony and you know the specific and error variance are small.

- Results in orthogonal (uncorrelated) factors.

Principal Axis Factoring (PAF)

- Considers only common variance (places communality estimates on diagonal of correlation matrix).

- Seeks least number of factors that can account for the common variance (correlation) of a set of variables.

- PAF analyzes only the common factor variability, removing the unique or unexplained variability from the model.

- PAF is preferred because it accounts for co-variation, whereas PCA accounts for total variance.

Maximum Likelihood (ML)

Use this method if you are unsure

- Maximizes differences between factors. Provides Model Fit estimate.

- This is the approach used in AMOS, so if you are going to use AMOS for CFA and structural modeling, you should use this one during the EFA.

Appropriateness of data (adequacy)

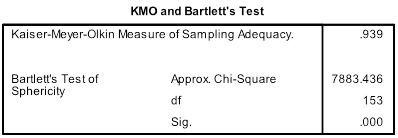

KMO Statistics

- Marvelous: .90s

- Meritorious: .80s

- Middling: .70s

- Mediocre: .60s

- Miserable: .50s

- Unacceptable: <.50

Bartlett’s Test of Sphericity

Tests the null hypothesis that the correlation matrix is an identity matrix.

- Diagonals are ones

- Off-diagonals are zeros

A significant result (Sig. < 0.05) indicates that the correlation matrix is not an identity matrix; i.e., the variables do relate to one another enough to run a meaningful EFA.
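For readers who want to see what sits behind these two adequacy statistics, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not the routine any particular package uses: the function names, the toy correlation matrix, and the sample size of 100 are all made up for the example. KMO is computed as the sum of squared correlations divided by the sum of squared correlations plus squared partial correlations, and Bartlett's statistic uses the usual chi-square approximation based on the determinant of the correlation matrix.

```python
import math

def invert_with_det(matrix):
    """Gauss-Jordan elimination: returns (inverse, determinant)."""
    n = len(matrix)
    # Augment each row with the matching row of the identity matrix.
    aug = [list(map(float, row)) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("correlation matrix is singular")
        if pivot != col:
            aug[col], aug[pivot] = aug[pivot], aug[col]
            det = -det  # a row swap flips the sign of the determinant
        scale = aug[col][col]
        det *= scale
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug], det

def kmo(corr):
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    inv, _ = invert_with_det(corr)
    n = len(corr)
    sum_r2 = sum_partial2 = 0.0
    for i in range(n):
        for j in range(n):
            if i != j:
                # Partial correlation of items i and j, controlling for the rest.
                partial = -inv[i][j] / math.sqrt(inv[i][i] * inv[j][j])
                sum_r2 += corr[i][j] ** 2
                sum_partial2 += partial ** 2
    return sum_r2 / (sum_r2 + sum_partial2)

def bartlett_sphericity(corr, n_obs):
    """Bartlett's test statistic and degrees of freedom."""
    p = len(corr)
    _, det = invert_with_det(corr)
    chi2 = -(n_obs - 1 - (2 * p + 5) / 6) * math.log(det)
    df = p * (p - 1) // 2
    return chi2, df

# Hypothetical 3-item correlation matrix and sample size, for illustration only.
corr = [[1.0, 0.5, 0.5],
        [0.5, 1.0, 0.5],
        [0.5, 0.5, 1.0]]
print("KMO:", round(kmo(corr), 3))
chi2, df = bartlett_sphericity(corr, n_obs=100)
print("Bartlett chi-square:", round(chi2, 2), "df:", df)
```

The significance value comes from comparing the statistic to a chi-square distribution with df degrees of freedom (for example with scipy.stats.chi2.sf, if SciPy is available). Note that the KMO function above is undefined for an exact identity matrix, since every term in the ratio is then zero.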

Communalities

A communality is the extent to which an item correlates with all other items. Higher communalities are better. If communalities for a particular variable are low (between 0.0-0.4), then that variable may struggle to load significantly on any factor. In the table below, you should identify low values in the "Extraction" column. Low values indicate candidates for removal after you examine the pattern matrix.
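As a rough sketch of where the "Extraction" values come from, the extracted communality of an item can be computed from its loadings. With uncorrelated factors it is the sum of the item's squared loadings; with correlated (oblique) factors the factor correlations enter as well. The function names, item names, and loadings below are hypothetical and only illustrate the 0.4 cutoff mentioned above.

```python
def communalities(pattern, factor_corr=None):
    """Communality per item: diagonal of P * Phi * P'.

    pattern     -- list of rows, one row of loadings per item
    factor_corr -- factor correlation matrix Phi; identity (orthogonal) if omitted
    """
    m = len(pattern[0])
    if factor_corr is None:
        factor_corr = [[1.0 if j == k else 0.0 for k in range(m)] for j in range(m)]
    return [
        sum(row[j] * factor_corr[j][k] * row[k] for j in range(m) for k in range(m))
        for row in pattern
    ]

def low_communality_items(names, values, cutoff=0.4):
    """Items whose communality falls below the cutoff (candidates to watch)."""
    return [name for name, h2 in zip(names, values) if h2 < cutoff]

# Hypothetical loadings for two items on two factors.
names = ["item_a", "item_b"]
pattern = [[0.8, 0.1],
           [0.3, 0.2]]
h2 = communalities(pattern)
print([round(v, 2) for v in h2])
print(low_communality_items(names, h2))
```

Flagged items are only candidates for removal; as noted above, examine the pattern matrix before dropping anything.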

Factor Structure

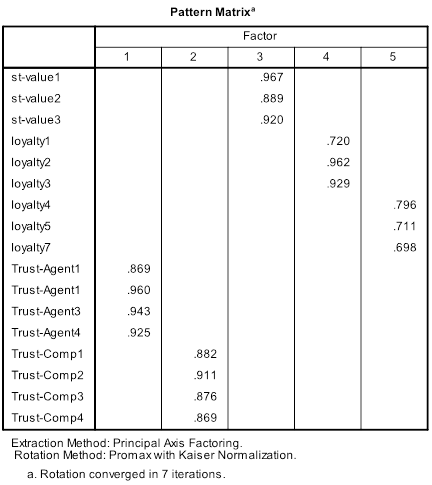

Factor structure refers to the intercorrelations among the variables being tested in the EFA. Using the pattern matrix below as an illustration, we can see that variables group into factors - more precisely, they "load" onto factors. The example below illustrates a very clean factor structure in which convergent and discriminant validity are evident by the high loadings within factors, and no major cross-loadings between factors (i.e., a primary loading should be at least 0.200 larger than any secondary loading).

Convergent validity

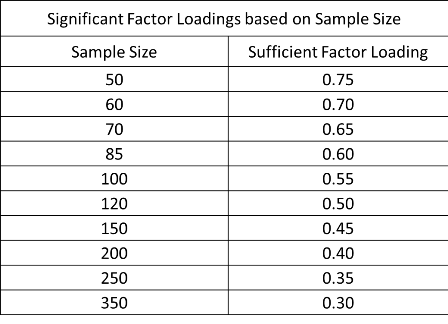

Convergent validity means that the variables within a single factor are highly correlated. This is evident by the factor loadings. Sufficient/significant loadings depend on the sample size of your dataset. The table below outlines the thresholds for sufficient/significant factor loadings. Generally, the smaller the sample size, the higher the required loading. We can see that in the pattern matrix above, we would need a sample size of 60-70 at a minimum to achieve significant loadings for variables loyalty1 and loyalty7. Regardless of sample size, it is best to have loadings greater than 0.500 and averaging out to greater than 0.700 for each factor. The table below is Table 3-2 from page 117 of Hair et al. (2010).
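The sample-size-independent rule of thumb above (every loading greater than 0.500, and the loadings on each factor averaging greater than 0.700) is easy to check mechanically. Here is a minimal sketch; the function name, factor names, item names, and loadings are all hypothetical, and the sample-size thresholds from the Hair et al. table are deliberately not encoded here.

```python
def convergent_validity(loadings_by_factor, min_loading=0.5, min_average=0.7):
    """Check each factor's primary loadings against the two rules of thumb.

    loadings_by_factor -- {factor name: {item name: loading on that factor}}
    """
    report = {}
    for factor, loadings in loadings_by_factor.items():
        # Items whose loading is not strictly greater than the minimum.
        weak = [item for item, value in loadings.items() if abs(value) <= min_loading]
        average = sum(abs(value) for value in loadings.values()) / len(loadings)
        report[factor] = {
            "weak_items": weak,
            "average": average,
            "ok": not weak and average > min_average,
        }
    return report

# Hypothetical primary loadings taken from a pattern matrix.
example = {
    "factor_1": {"x1": 0.66, "x2": 0.85, "x3": 0.80},
    "factor_2": {"y1": 0.45, "y2": 0.90, "y3": 0.90},
}
for factor, result in convergent_validity(example).items():
    print(factor, result)
```

In this made-up example the second factor averages above 0.700 but still fails because one item loads below 0.500, which is exactly the kind of item to inspect more closely.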

Discriminant validity

Discriminant validity refers to the extent to which factors are distinct and uncorrelated. The rule is that variables should relate more strongly to their own factor than to another factor. Two primary methods exist for determining discriminant validity during an EFA. The first method is to examine the pattern matrix. Variables should load significantly only on one factor. If "cross-loadings" do exist (variable loads on multiple factors), then the cross-loadings should differ by more than 0.2. The second method is to examine the factor correlation matrix, as shown below. Correlations between factors should not exceed 0.7. A correlation of 0.7 means the two factors share about half of their variance (0.7 * 0.7 = 0.49, or 49% shared variance), so anything higher indicates that the majority of variance is shared. As we can see from the factor correlation matrix below, factor 2 is too highly correlated with factors 1, 3, and 4.

What if you have discriminant validity problems, for example, the items from two theoretically different factors end up loading on the same extracted factor (instead of on separate factors)? I have found the best way to resolve this type of issue is to do a separate EFA with just the items from the offending factors. Work out this smaller EFA (by removing items one at a time that have the worst cross-loadings), then reinsert the remaining items into the full EFA. This will usually resolve the issue. If it doesn't, then consider whether these two factors are actually just two dimensions or manifestations of some higher order factor. If this is the case, then you might consider doing the EFA for this higher order factor separate from all the items belonging to first order factors. Then during the CFA, make sure to model the higher order factor properly by making a 2nd order latent variable.
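The two discriminant validity checks described above (the 0.2 gap between an item's primary and secondary loading, and the 0.7 ceiling on factor correlations) can be sketched as follows. This is a simplified illustration with hypothetical function names and made-up numbers. It flags any item whose two largest loadings are within 0.2 of each other, whether or not the secondary loading would count as significant.

```python
def cross_loading_problems(pattern, min_gap=0.2):
    """Items whose primary loading does not exceed the next largest by more than min_gap.

    pattern -- {item name: [loading on factor 1, loading on factor 2, ...]}
    """
    problems = {}
    for item, loadings in pattern.items():
        ranked = sorted((abs(value) for value in loadings), reverse=True)
        if len(ranked) > 1:
            # Round to avoid floating-point noise around the threshold.
            gap = round(ranked[0] - ranked[1], 3)
            if gap <= min_gap:
                problems[item] = gap
    return problems

def factor_correlation_problems(factor_corr, max_corr=0.7):
    """Factor pairs correlated above max_corr, with their shared variance (r squared)."""
    problems = []
    m = len(factor_corr)
    for i in range(m):
        for j in range(i + 1, m):
            r = factor_corr[i][j]
            if abs(r) > max_corr:
                problems.append((i + 1, j + 1, r, r * r))
    return problems

# Hypothetical pattern matrix and factor correlation matrix.
pattern = {"a1": [0.80, 0.05], "a2": [0.55, 0.45], "b1": [0.10, 0.75]}
factor_corr = [[1.00, 0.75],
               [0.75, 1.00]]
print(cross_loading_problems(pattern))
print(factor_correlation_problems(factor_corr))
```

Items flagged by the first check are the natural candidates for the one-at-a-time removal described above.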

Face validity

Face validity is very simple. Do the factors make sense? For example, are variables that are similar in nature loading together on the same factor? If there are exceptions, are they explainable? Factors that demonstrate sufficient face validity should be easy to label. For example, in the pattern matrix above, we could easily label factor 1 "Trust in the Agent" (assuming the variable names are representative of the measure used to collect data for this variable). If all the "Trust" variables in the pattern matrix above loaded onto a single factor, we may have to abstract a bit and call this factor "Trust" rather than "Trust in Agent" and "Trust in Company".

Reliability

Reliability refers to the consistency of the item-level errors within a single factor. Reliability means just what it sounds like: a "reliable" set of variables will consistently load on the same factor. The way to test reliability in an EFA is to compute Cronbach's alpha for each factor. Cronbach's alpha should be above 0.7; although, ceteris paribus, the value will generally increase for factors with more variables, and decrease for factors with fewer variables. Each factor should aim to have at least 3 variables, although 2 variables is sometimes permissible.
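For reference, Cronbach's alpha for a factor with k items is k / (k - 1) * (1 - sum of item variances / variance of the summed score). Here is a minimal sketch using only the Python standard library; the function name and the response data are hypothetical.

```python
from statistics import variance

def cronbach_alpha(items):
    """Cronbach's alpha.

    items -- list of columns, one list of respondent scores per item
             (all columns the same length, respondents in the same order)
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Summed scale score for each respondent.
    totals = [sum(scores) for scores in zip(*items)]
    item_variance = sum(variance(column) for column in items)
    return k / (k - 1) * (1 - item_variance / variance(totals))

# Hypothetical responses from four people to two items of one factor.
item_1 = [1, 2, 3, 4]
item_2 = [2, 1, 4, 3]
print(cronbach_alpha([item_1, item_2]))
```

Run this once per factor, passing only the items that load on that factor.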

Formative vs. Reflective

Specifying formative versus reflective constructs is a critical preliminary step prior to further statistical analysis. The indicators for reflective constructs are required to be correlated, whereas the indicators for formative constructs are not required to be correlated. As such, formative constructs should not be expected to properly factor in an EFA, and cannot be modeled appropriately in AMOS during a CFA. If you need to work with formative factors, either use a Partial Least Squares approach (see PLS section), or create a score (new variable) for each set of formative indicators. This score could be an average or a sum, or some sort of weighted scoring. Here is how you know whether you're working with formative or reflective constructs:

Formative

- Direction of causality is from measure to construct

- No reason to expect the measures are correlated

- Indicators are not interchangeable

Reflective

- Direction of causality is from construct to measure

- Measures expected to be correlated

- Indicators are interchangeable

An example of formative versus reflective constructs is given in the figure below.

Common EFA Problems

1. EFA that results in too many or too few factors (contrary to expected number of factors).

- This happens all the time when you extract based on eigenvalues. I encourage students to use eigenvalues first, but then also to try constraining to the exact number of expected factors. Concerns arise when the eigenvalues extract fewer than expected, so constraining ends up extracting factors with very low eigenvalues (and therefore not very useful factors).

2. EFA with low communalities for some items.

- This is a sign of low correlation and is usually corroborated by a low pattern matrix loading. I tell students not to remove an item just because of a low communality, but to watch it carefully throughout the rest of the EFA.

3. EFA with a 2nd order construct involved, as well as several first order constructs.

- Often when there is a 2nd order factor in an EFA, the subdimensions of that factor will all load together, instead of in separate factors. In such cases, I recommend doing a separate EFA for the items of that 2nd order factor (use Promax and Principal Components Analysis). Then, if that EFA results in removing some items to achieve discriminant validity, you can try putting the EFA back together with the remaining items (although it still might not work). Then, during the CFA, be sure to properly model the 2nd order factor with an additional latent variable connected to its sub-factors.

4. EFA with Heywood cases

- Sometimes loadings are greater than 1.00. I don’t address these until I’ve addressed all other problems. Once I have a good EFA solution, then if the Heywood case is still there (usually it resolves itself), then I try a different rotation method (Varimax will fix it every time).

Some Thoughts on Messy EFAs

Let us say that you are doing an EFA and your pattern matrix ends up a mess. Let’s say that the items from one or two constructs do not load as expected no matter how you manipulate the EFA. What can you do about it? There is no right answer (this is statistics after all), but you do have a few options:

1. You can remove those constructs from the model and move forward without them.

- This option is not recommended as it is usually the last course of action to take. You should always do everything in your power to retain constructs that are key to your theory.

2. You can run the EFA using a more exploratory approach without regard to expected loadings. For example, if you expected item foo3 to load with items foo1 and foo2, but instead it loaded with items moo1-3, then you should just let it. Then rename your factors according to what loaded on them.

- This option is acceptable, but will lead you to produce a model that is probably somewhat different from the one you had expected to end up with.

3. You can say to yourself, “Why am I doing an EFA? These are established scales and I already know which items belong to which constructs (theoretically). I do not need to explore the relationships between the items because I already know the relationships. So shouldn’t I be doing a CFA instead – to confirm these expectations?” And then you would simply jump to the CFA first to refine your measurement model (but then you return to your EFA after your CFA).

- Surveys are usually built with a priori constructs and theory in mind – or surveys are built from existing scales that have been validated in previous literature. Thus, we are less inclined to “explore” and more inclined to “confirm” when doing factor analysis. The point of a factor analysis is to show that you have distinct constructs (discriminant validity) that each measures a single thing (convergent validity), and that are reliable (reliability). This can all be achieved in the CFA. However, you should then go back to the EFA and "confirm" the CFA in the EFA by setting up the EFA as your CFA turned out.

Why do I bring this up? Mainly because your EFAs are nearly always going to run messy, and because you can endlessly mess around with an EFA. If you believe everything your EFA is telling you, you will end up throwing away items and constructs unnecessarily, and thus you will end up letting statistics drive your theory instead of letting theory drive your theory. EFAs are exploratory and they can be treated as such. We want to retain as much as possible and still be producing valid results. I don’t know if this is emphasized enough in our quant courses. I also bring this up because I ran an EFA recently and got something I could not salvage without hacking a couple of constructs. However, after running the CFA with the full model (ignoring the EFA), I was able to retain all constructs by only removing a few items (and not the ones I expected based on the EFA!). I now have excellent reliability, convergent validity, and only a minor issue with discriminant validity that I’m willing to justify for the greater good of the model. I can now go back and reconcile my CFA with an EFA.

For a very rocky but successful demonstration of handling a troublesome EFA, watch my SEM Boot Camp 2014 Day 3 Afternoon Video towards the end. The link below will start you at the right time position. In this video, I take one of the seminar participants' data, which I had never seen before, and with which he had been unable to arrive at a clean EFA, and I struggle through it until we arrive at something valid and usable.

VIDEO TUTORIAL: Tackling a Messy EFA
