Data Prep

LESSON: Data Screening

VIDEO TUTORIAL: Data Screening

Data screening (sometimes referred to as "data screaming") is the process of ensuring your data is clean and ready to go before you conduct further statistical analyses. Data must be screened in order to ensure the data is useable, reliable, and valid for testing causal theory. In this section I will focus on six specific issues that need to be addressed when cleaning (not cooking) your data.

Do you know of some citations that could be used to support the topics and procedures discussed in this section? Please email them to me with the name of the section, procedure, or subsection that they support. Thanks!

Missing Data

If you are missing much of your data, this can cause several problems. The most apparent problem is that there simply won't be enough data points to run your analyses. The EFA, CFA, and path models require a certain number of data points in order to compute estimates. This number increases with the complexity of your model. If you are missing several values in your data, the analysis just won't run.

Additionally, missing data might represent bias issues. Some people may not have answered particular questions in your survey because of some common issue. For example, if you asked about gender, and females are less likely to report their gender than males, then you will have male-biased data. Perhaps only 50% of the females reported their gender, but 95% of the males reported gender. If you use gender in your causal models, then you will be heavily biased toward males, because you will not end up using the unreported responses.

To find out how many missing values each variable has, in SPSS go to Analyze, then Descriptive Statistics, then Frequencies. Enter the variables in the variables list. Then click OK. The table in the output will show the number of missing values for each variable.

The threshold for missing data is flexible, but generally, if you are missing more than 10% of the responses on a particular variable, or from a particular respondent, that variable or respondent may be problematic. There are several ways to deal with problematic variables.

- Just don't use that variable.

- If it makes sense, impute the missing values. This should only be done for continuous or interval data (like age or Likert-scale responses), not for categorical data (like gender).

- If your dataset is large enough, just don't use the responses that had missing values for that variable. This may create a bias, however, if the number of missing responses is greater than 10%.
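If you want to run the same missing-data check outside SPSS, here is a minimal Python sketch. The variable names and the tiny dataset are made up for illustration, and `THRESHOLD` simply encodes the flexible 10% guideline mentioned above; adjust it to whatever cutoff you can justify.

```python
# Hypothetical survey data: one dict per respondent, None marks a missing answer.
responses = [
    {"age": 34, "diet": 4, "weight_loss": 3},
    {"age": None, "diet": 5, "weight_loss": 4},
    {"age": 29, "diet": None, "weight_loss": None},
    {"age": 41, "diet": 2, "weight_loss": 2},
]

def missing_rate_by_variable(rows):
    """Share of respondents with no value, per variable."""
    return {
        var: sum(row[var] is None for row in rows) / len(rows)
        for var in rows[0]
    }

def missing_rate_by_respondent(rows):
    """Share of variables left blank, per respondent (in row order)."""
    return [
        sum(value is None for value in row.values()) / len(row)
        for row in rows
    ]

THRESHOLD = 0.10  # the flexible 10% guideline; an assumption you can change

variable_rates = missing_rate_by_variable(responses)
respondent_rates = missing_rate_by_respondent(responses)

flagged_variables = [v for v, r in variable_rates.items() if r > THRESHOLD]
flagged_respondents = [i for i, r in enumerate(respondent_rates) if r > THRESHOLD]

print(variable_rates)        # every variable is missing for 1 of 4 respondents
print(flagged_respondents)   # [1, 2]
```

In a toy dataset this small everything gets flagged, which is the point: one blank out of four responses is already 25%.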

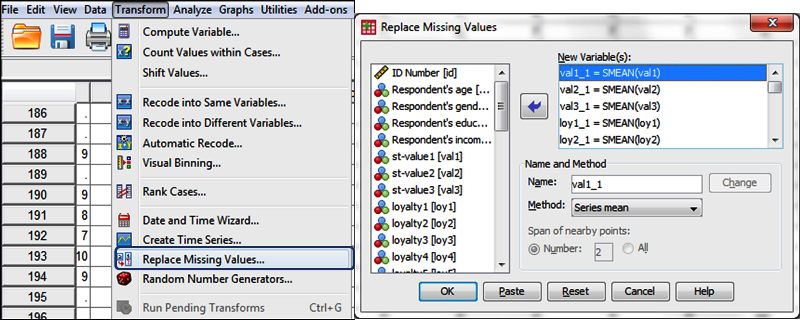

To impute values in SPSS, go to Transform, Replace Missing Values; then select the variables that need imputing, and hit OK. See the screenshots below. In this screenshot, I use the Mean replacement method. But there are other options, including Median replacement. Typically with Likert-type data, you want to use median replacement, because means are less meaningful in these scenarios. For more information on when to use which type of imputation, refer to: Lynch (2003)
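The replacement logic itself is simple enough to sketch in Python. This is only an illustration of mean versus median replacement, not a reproduction of what SPSS does internally; the `impute` helper and the sample item are hypothetical.

```python
from statistics import mean, median

def impute(values, method="median"):
    """Replace None entries with the mean or median of the observed values."""
    if method not in ("mean", "median"):
        raise ValueError("method must be 'mean' or 'median'")
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a variable with no observed values")
    fill = median(observed) if method == "median" else mean(observed)
    return [fill if v is None else v for v in values]

satisfaction = [4, 5, None, 2, 4, None, 5]  # hypothetical 5-point Likert item
imputed = impute(satisfaction, method="median")
print(imputed)  # [4, 5, 4, 2, 4, 4, 5]
```

With Likert-type items the median keeps the imputed value on the scale whenever the middle of the observed values is a single response; a mean of 3.6 is not something a respondent could have chosen.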

Handling problematic respondents is somewhat more difficult. If a respondent did not answer a large portion of the questions, their other responses may be useless when it comes to testing causal models. For example, if they answered questions about diet, but not about weight loss, for this individual we cannot test a causal model that argues that diet has a positive effect on weight loss. We simply do not have the data for that person. My recommendation is to first determine which variables will actually be used in your model (often we collect data on more variables than we actually end up using in our model), then determine if the respondent is problematic. If so, then remove that respondent from the analysis.

Outliers

Outliers can influence your results, pulling the mean away from the median. Two types of outliers exist: outliers for individual variables, and outliers for the model.

Univariate

VIDEO TUTORIAL: Detecting Univariate Outliers

To detect outliers on each variable, produce a boxplot in SPSS (as demonstrated in the video). Outliers will appear at the extremes and will be labeled, as in the figure below. If you have a really large sample, then you may want to remove the outliers. If you are working with a smaller dataset, you may want to be more conservative about deleting records. However, this is a trade-off, because outliers will influence small datasets more than large ones. Lastly, outliers do not really exist in Likert scales: answering at the extreme (1 or 5) is a legitimate response, not outlier behavior.
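A boxplot flags points that sit far outside the box. A rough Python equivalent is the common 1.5 x IQR rule sketched below. Treat it as an approximation: quartile conventions differ between packages, so borderline cases may not match what SPSS labels. The `ages` data are invented.

```python
from statistics import quantiles

def iqr_outliers(values, k=1.5):
    """Return the values lying more than k * IQR outside the quartiles."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]

ages = [23, 25, 27, 28, 30, 31, 33, 35, 36, 95]  # hypothetical continuous variable
print(iqr_outliers(ages))  # [95]
```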

Another type of outlier is an unengaged respondent. Sometimes respondents will enter '3, 3, 3, 3,...' for every single survey item. This participant was clearly not engaged, and their responses will throw off your results. Other patterns indicative of unengaged respondents are '1, 2, 3, 4, 5, 1, 2, ...' or '1, 1, 1, 1, 5, 5, 5, 5, 1, 1, ...'. There are multiple ways to identify and eliminate these unengaged respondents:

- Include attention traps that request the respondent to "answer somewhat agree for this item if you are paying attention". I usually include two of these in opposite directions (i.e., one says somewhat agree and one says somewhat disagree) at about a third and two-thirds of the way through my surveys. I am always astounded at how many I catch this way...

- See if the participant answered reverse-coded questions in the same direction as normal questions. For example, if they responded strongly agree to both of these items, then they were not paying attention: "I am very hungry", "I don't have much appetite right now".
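Two of these checks are easy to automate. The sketch below flags respondents whose answers have zero standard deviation (the '3, 3, 3, 3,...' pattern) and respondents who strongly agree with both an item and its reverse-coded twin. The item positions and respondent IDs are hypothetical, and a zero-variance check will not catch patterned answers like '1, 2, 3, 4, 5, 1, 2, ...', so still eyeball the data.

```python
from statistics import pstdev

def is_straightliner(answers, min_sd=0.0):
    """True when the answers barely vary, e.g. '3, 3, 3, 3, ...'."""
    return pstdev(answers) <= min_sd

def agrees_with_both(answer, reversed_answer, scale_max=5):
    """True when the top option is chosen on an item and on its reverse-coded twin."""
    return answer == scale_max and reversed_answer == scale_max

respondents = {
    "r1": [3, 3, 3, 3, 3, 3],
    "r2": [4, 2, 5, 3, 4, 1],
    "r3": [5, 5, 4, 5, 5, 5],
}
# Hypothetical layout: index 0 is "I am very hungry",
# index 5 is "I don't have much appetite right now".
HUNGRY, NO_APPETITE = 0, 5

flagged = sorted(
    name
    for name, answers in respondents.items()
    if is_straightliner(answers)
    or agrees_with_both(answers[HUNGRY], answers[NO_APPETITE])
)
print(flagged)  # ['r1', 'r3']
```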

Multivariate

VIDEO TUTORIAL: Detecting Multivariate Influential Outliers

Multivariate outliers are records that do not fit the standard sets of correlations exhibited by the other records in the dataset, with regard to your causal model. So, if all but one person in the dataset reports that diet has a positive effect on weight loss, but this one guy reports that he gains weight when he diets, then his record would be considered a multivariate outlier. To detect these influential multivariate outliers, you need to calculate the Mahalanobis d-squared. This is a simple matter in AMOS. See the video tutorial for the particulars. As a warning, however, I almost never address multivariate outliers, as it is very difficult to justify removing them just because they don't match your theory. Additionally, you will nearly always find multivariate outliers: even if you remove them, more will show up. It is a slippery slope.
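To make the Mahalanobis d-squared less of a black box, here is a small Python sketch for the two-variable case only, where the covariance matrix can be inverted by hand. AMOS computes it across all observed variables in the model, so this is an illustration of the idea rather than a replacement. The diet and weight-loss numbers are invented, with the last record playing the guy who gains weight when he diets.

```python
from statistics import mean

def mahalanobis_d2(xs, ys):
    """Mahalanobis d-squared of each (x, y) record from the sample centroid."""
    n = len(xs)
    mx, my = mean(xs), mean(ys)
    # Sample variances and covariance (n - 1 denominator).
    sxx = sum((x - mx) ** 2 for x in xs) / (n - 1)
    syy = sum((y - my) ** 2 for y in ys) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (n - 1)
    det = sxx * syy - sxy ** 2
    if det <= 0:
        raise ValueError("covariance matrix is singular")
    # Quadratic form (x - m)' S^-1 (x - m), using the closed-form 2x2 inverse.
    return [
        ((x - mx) ** 2 * syy
         - 2 * (x - mx) * (y - my) * sxy
         + (y - my) ** 2 * sxx) / det
        for x, y in zip(xs, ys)
    ]

diet = [1, 2, 3, 4, 5, 6, 7, 5]                            # hypothetical scores
weight_loss = [1.1, 2.0, 2.9, 4.2, 5.1, 5.8, 7.0, -4.0]    # last record breaks the pattern

d2 = mahalanobis_d2(diet, weight_loss)
most_extreme = d2.index(max(d2))
print(most_extreme, round(d2[most_extreme], 2))
```

The record with the largest d-squared is the one that least fits the diet and weight-loss pattern shared by everyone else, even though neither of its values is extreme on its own.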

A more conservative approach that I would recommend is to examine the influential cases indicated by Cook's distance. Here is a video explaining what this is and how to do it. This video also discusses multicollinearity.

VIDEO TUTORIAL: Multivariate Assumptions

Normality

VIDEO TUTORIAL: Detecting Normality Issues

Normality refers to the distribution of the data for a particular variable. We usually assume that the data is normally distributed, even though it usually is not! Normality is assessed in many different ways: shape, skewness, and kurtosis (flat/peaked).

- Shape: To discover the shape of the distribution in SPSS, build a histogram (as shown in the video tutorial) and plot the normal curve. If the histogram does not match the normal curve, then you likely have normality issues. You can also look at the boxplot to determine normality.

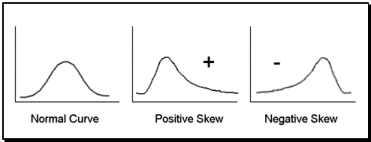

- Skewness: Skewness means that the responses did not fall into a normal distribution, but were heavily weighted toward one end of the scale. Income is an example of a commonly right-skewed variable; most people make between 20 and 70 thousand dollars in the USA, but there is a smaller group that makes between 70 and 100, an even smaller group that makes between 100 and 150, and a much smaller group that makes between 150 and 250, etc., all the way up to Bill Gates and Mark Zuckerberg. Addressing skewness may require transformations of your data (if continuous), or removing influential outliers. There are two rules on skewness:

- (1) If your skewness value is greater than 1, then you are positive (right) skewed; if it is less than -1, you are negative (left) skewed; if it is in between, then you are fine. Some published thresholds are a bit more liberal and allow for up to +/-2.2, instead of +/-1.

- (2) If the absolute value of the skewness is less than three times the standard error, then you are fine; otherwise you are skewed.

Using these rules, we can see from the table below, that all three variables are fine using the first rule, but using the second rule, they are all negative (left) skewed.

Skewness looks like this:

- Kurtosis:

Kurtosis refers to the outliers of the distribution of data. Data that have outliers have large kurtosis. Data without outliers have low kurtosis. The kurtosis (excess kurtosis) of the normal distribution is 0. The rule for evaluating whether or not your kurtosis is problematic is the same as rule two above:

- If the absolute value of the kurtosis is less than three times the standard error, then the kurtosis is not significantly different from that of the normal distribution; otherwise you have kurtosis issues. A looser rule accepts an overall kurtosis score of 2.200 or less (rather than 1.00) (Sposito et al., 1983).
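Both rules for skewness and kurtosis can be sketched in a few lines of Python. The formulas below are the usual bias-adjusted sample skewness and excess kurtosis with their standard errors, which I believe match what SPSS reports; treat that as an assumption and check against your own output. The income figures are invented.

```python
from math import sqrt
from statistics import mean, stdev

def skewness_and_kurtosis(values):
    """Return (skewness, SE of skewness, excess kurtosis, SE of kurtosis)."""
    n = len(values)
    m, s = mean(values), stdev(values)
    z = [(v - m) / s for v in values]
    skew = n / ((n - 1) * (n - 2)) * sum(t ** 3 for t in z)
    kurt = (n * (n + 1) / ((n - 1) * (n - 2) * (n - 3)) * sum(t ** 4 for t in z)
            - 3 * (n - 1) ** 2 / ((n - 2) * (n - 3)))
    se_skew = sqrt(6 * n * (n - 1) / ((n - 2) * (n + 1) * (n + 3)))
    se_kurt = 2 * se_skew * sqrt((n * n - 1) / ((n - 3) * (n + 5)))
    return skew, se_skew, kurt, se_kurt

def passes_rule_one(stat, cutoff=1.0):
    """Rule (1): the statistic lies within +/- cutoff."""
    return abs(stat) <= cutoff

def passes_rule_two(stat, standard_error):
    """Rule (2): the absolute statistic is under three standard errors."""
    return abs(stat) < 3 * standard_error

# Hypothetical incomes in thousands of dollars, with a long right tail.
incomes = [22, 25, 31, 38, 40, 45, 52, 58, 64, 70, 95, 140, 250]
skew, se_skew, kurt, se_kurt = skewness_and_kurtosis(incomes)

print(round(skew, 2), passes_rule_one(skew), passes_rule_two(skew, se_skew))
print(round(kurt, 2), passes_rule_two(kurt, se_kurt))
```

Notice that the standard errors shrink as the sample grows, so rule two gets stricter with large samples while rule one stays put. That is why the two rules can disagree.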

Kurtosis looks like this:

- Bimodal:

One other issue you may run into with the distribution of your data is a bimodal distribution. This means that the data has two peaks, rather than a single peak at the mean. This may indicate there are moderating variables affecting this data. A bimodal distribution looks like this:

- Transformations:

VIDEO TUTORIAL: Transformations

When you have extremely non-normal data, it will influence your regressions in SPSS and AMOS. In such cases, if you have non-Likert-scale variables (so, variables like age, income, revenue, etc.), you can transform them prior to including them in your model. Gary Templeton has published an excellent article on this and created a YouTube video showing how to conduct the transformation. He also references his article in the video.

Linearity

Linearity refers to the consistent slope of change that represents the relationship between an IV and a DV. If the relationship between the IV and the DV is radically inconsistent, then it will throw off your SEM analyses. There are dozens of ways to test for linearity. Perhaps the most elegant (easy and clear-cut, yet rigorous), is the deviation from linearity test available in the ANOVA test in SPSS. In SPSS go to Analyze, Compare Means, Means. Put the composite IVs and DVs in the lists, then click on options, and select "Test for Linearity". Then in the ANOVA table in the output window, if the Sig value for Deviation from Linearity is less than 0.05, the relationship between IV and DV is not linear, and thus is problematic (see the screenshots below). Issues of linearity can sometimes be fixed by removing outliers (if the significance is borderline), or through transforming the data. In the screenshot below, we can see that the first relationship is linear (Sig = .268), but the second relationship is nonlinear (Sig = .003).

- If this test turns up odd results, then simply perform an OLS linear regression between each IV->DV pair. If the sig value is less than 0.05, then the relationship can be considered "sufficiently" linear. While this approach is somewhat less rigorous, it has the benefit of working every time! You can also do a curvilinear regression ("curve estimation") to see if the relationship is more linear than non-linear.
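For the curious, the deviation from linearity test boils down to an F ratio: the between-group variation in the DV that a straight line does not explain, divided by the within-group variation. The Python sketch below computes that ratio under the assumption that each distinct IV value is treated as a group, which is my reading of how the SPSS Means procedure works. It stops at the F statistic and degrees of freedom; turning F into a Sig value requires the F distribution (a statistics table or a library such as SciPy), which is left out here. The data are invented.

```python
from collections import defaultdict
from statistics import mean

def deviation_from_linearity(iv, dv):
    """Return (F, df_deviation, df_within) for the deviation-from-linearity test."""
    n = len(iv)
    groups = defaultdict(list)
    for x, y in zip(iv, dv):
        groups[x].append(y)          # each distinct IV value forms a group
    k = len(groups)
    if k < 3 or n <= k:
        raise ValueError("need at least 3 IV levels and repeated observations")
    grand, mx = mean(dv), mean(iv)
    ss_between = sum(len(ys) * (mean(ys) - grand) ** 2 for ys in groups.values())
    ss_within = sum((y - mean(ys)) ** 2 for ys in groups.values() for y in ys)
    if ss_within == 0:
        raise ValueError("no within-group variation to test against")
    sxx = sum((x - mx) ** 2 for x in iv)
    sxy = sum((x - mx) * (y - grand) for x, y in zip(iv, dv))
    ss_linear = sxy ** 2 / sxx       # variation explained by a straight line
    ss_deviation = ss_between - ss_linear
    f_stat = (ss_deviation / (k - 2)) / (ss_within / (n - k))
    return f_stat, k - 2, n - k

iv = [1, 1, 2, 2, 3, 3, 4, 4, 5, 5]
dv_linear = [2.1, 1.9, 4.0, 4.2, 5.9, 6.1, 8.1, 7.9, 10.0, 10.2]
dv_curved = [1.1, 0.9, 4.0, 4.2, 8.9, 9.1, 16.1, 15.9, 25.0, 25.2]

f_linear, df1, df2 = deviation_from_linearity(iv, dv_linear)
f_curved, _, _ = deviation_from_linearity(iv, dv_curved)
print(round(f_linear, 2), round(f_curved, 2), df1, df2)
```

A small F (the linear data) means the group means sit close to a straight line; a large F (the curved data) is what produces a Sig value below 0.05.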

Homoscedasticity

VIDEO TUTORIAL: Plotting Homoscedasticity

Encyclopedia of Research Design, Volume 1 (2010), Sage Publications, pg. 581

- Simple and thorough explanation: Heteroscedasticity in Regression Analysis

Homoscedasticity is a nasty word that means that the variable's residual (error) exhibits consistent variance across different levels of the variable. There are good reasons for desiring this. For more information, see Hair et al. 2010 chapter 2. :) A simple way to determine if a relationship is homoscedastic is to do a simple scatter plot with the variable on the y-axis and the variable's residual on the x-axis. To see a step by step guide on how to do this, watch the video tutorial. If the plot comes up with a consistent pattern - as in the figure below, then we are good - we have homoscedasticity! If there is not a consistent pattern, then the relationship is considered heteroskedastic. This can be fixed by transforming the data or by splitting the data by subgroups (such as two groups for gender). You can read more about transformations in Hair et al. 2010 ch. 4.
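If you would rather have a number than squint at a plot, one crude option is to fit a simple regression, sort the residuals by the predictor, and compare their variance in the lower and upper halves. To be clear, this ratio is my own simplification for illustration. It is not the plotting procedure from the video and not a formal test. The data are invented so that the spread fans out as x grows.

```python
from statistics import mean, pvariance

def ols_residuals(xs, ys):
    """Residuals from a simple least-squares line of y on x."""
    mx, my = mean(xs), mean(ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    intercept = my - slope * mx
    return [y - (intercept + slope * x) for x, y in zip(xs, ys)]

def residual_variance_ratio(xs, ys):
    """Residual variance in the upper half of x divided by that in the lower half."""
    pairs = sorted(zip(xs, ols_residuals(xs, ys)))
    half = len(pairs) // 2
    low = [r for _, r in pairs[:half]]
    high = [r for _, r in pairs[-half:]]
    return pvariance(high) / pvariance(low)

xs = list(range(1, 11))
noise = [0.1, -0.1, 0.2, -0.2, 0.3, -1.0, 1.5, -2.0, 2.5, -3.0]  # grows with x
ys = [2 * x + e for x, e in zip(xs, noise)]

ratio = residual_variance_ratio(xs, ys)
print(round(ratio, 1))
```

A ratio near 1 is what consistent variance looks like; a ratio far from 1, as here, suggests the residual spread changes across levels of the variable.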

The jury is still out on homoscedasticity. Some suggest that evidence of heteroskedasticity is not a problem (and is actually desirable and expected in moderated models), and so we shouldn't worry about testing for homoscedasticity. I never conduct this test unless specifically requested to by a reviewer.

Multicollinearity

VIDEO TUTORIAL: Detecting Multicollinearity

Multicollinearity is not desirable. It means that the variances our independent variables explain in our dependent variable overlap with each other, so the independent variables are not each explaining unique variance in the dependent variable. The way to check this is to calculate a Variance Inflation Factor (VIF) for each independent variable after running a multivariate regression. The rules of thumb for the VIF are as follows:

- VIF < 3: not a problem

- VIF > 3: potential problem

- VIF > 5: very likely problem

- VIF > 10: definitely a problem

The tolerance value in SPSS is the reciprocal of the VIF (tolerance = 1 / VIF), and values less than 0.10 are strong indications of multicollinearity issues. For particulars on how to calculate the VIF in SPSS, watch the step-by-step video tutorial. The easiest method for fixing multicollinearity issues is to drop one of the problematic variables. This won't hurt your R-square much because that variable doesn't add much unique explanation of variance anyway.
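To see where the VIF comes from, here is a plain-Python sketch. It relies on the fact that the VIFs are the diagonal entries of the inverse of the predictors' correlation matrix, which is equivalent to 1 / (1 - R-square) from regressing each predictor on the others. The predictor names and values are hypothetical; `x2` is deliberately almost a copy of `x1`.

```python
from statistics import mean

def correlation_matrix(columns):
    """Pearson correlations between every pair of columns."""
    centered = []
    for col in columns:
        m = mean(col)
        centered.append([v - m for v in col])
    norms = [sum(v * v for v in col) ** 0.5 for col in centered]
    return [
        [sum(a * b for a, b in zip(ci, cj)) / (ni * nj)
         for cj, nj in zip(centered, norms)]
        for ci, ni in zip(centered, norms)
    ]

def invert(matrix):
    """Gauss-Jordan inverse with partial pivoting."""
    size = len(matrix)
    aug = [row[:] + [float(i == j) for j in range(size)]
           for i, row in enumerate(matrix)]
    for col in range(size):
        pivot = max(range(col, size), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("predictors are perfectly collinear")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(size):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[size:] for row in aug]

def vif(predictors):
    """VIF per predictor: diagonal of the inverse correlation matrix."""
    names = list(predictors)
    inverse = invert(correlation_matrix([predictors[n] for n in names]))
    return {name: inverse[i][i] for i, name in enumerate(names)}

predictors = {
    "x1": [1, 2, 3, 4, 5, 6, 7, 8],
    "x2": [1.2, 2.1, 2.8, 4.0, 4.9, 6.2, 7.1, 7.7],  # nearly identical to x1
    "x3": [5, 1, 4, 2, 8, 3, 7, 6],
}
vifs = vif(predictors)
tolerances = {name: 1 / value for name, value in vifs.items()}
print({name: round(value, 2) for name, value in vifs.items()})
```

Here `x1` and `x2` blow well past the VIF > 10 threshold while `x3` stays low, so dropping either `x1` or `x2` would be the easy fix.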

For a more critical examination of multicollinearity, please refer to: